Data Pipelines in Microsoft Fabric

Data Pipelines in Microsoft Fabric

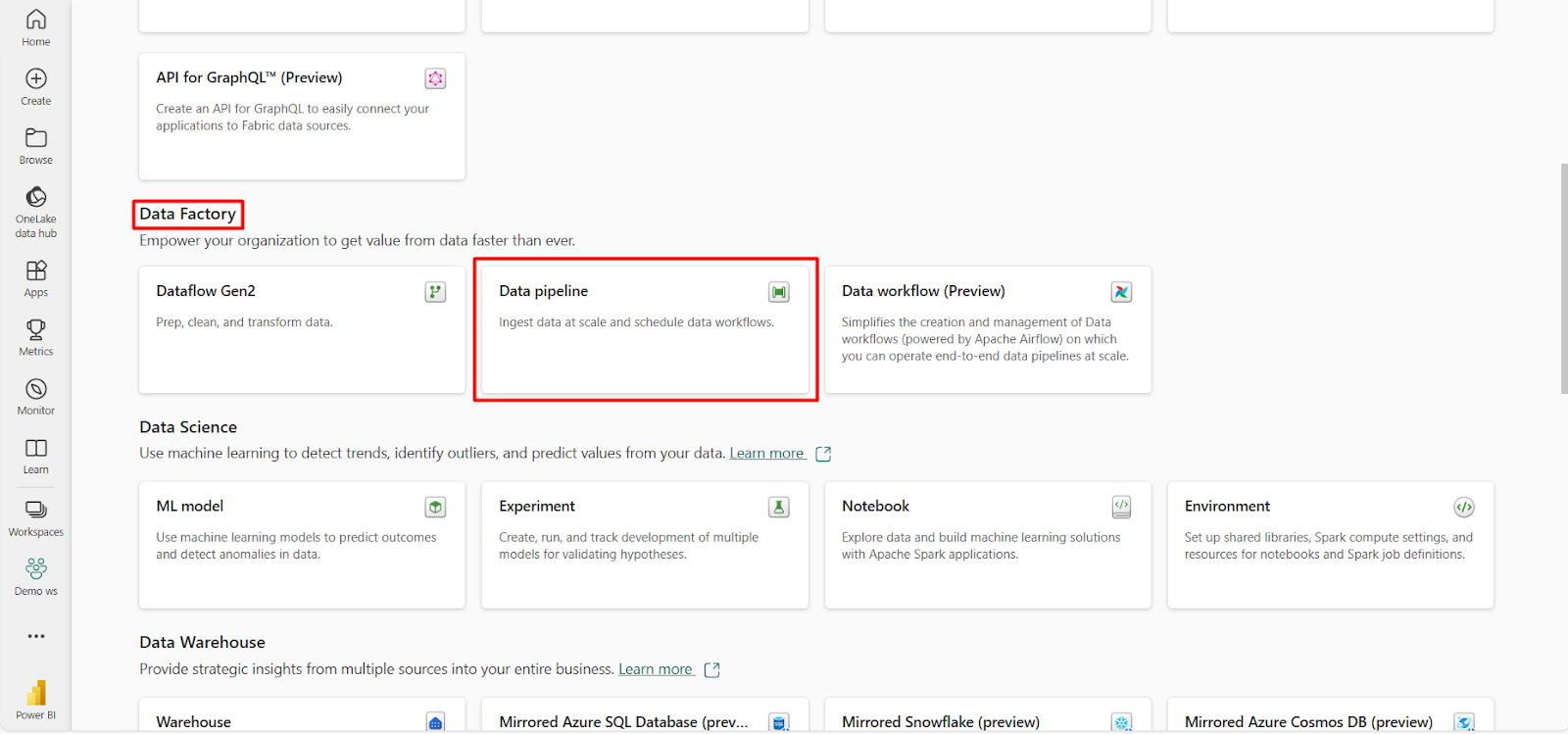

Microsoft Fabric offers a range of features to elevate your data analytics experience, including the Data Pipeline, built from Azure Data Factory. Even if you're used to working with Power Query Dataflows, the addition of Data Pipelines brings substantial value and enriches the overall experience. In this piece, we will delve into what a Data Pipeline entails within Microsoft Fabric Data Factory and present a straightforward example to demonstrate its application.

What is a Data Pipeline or Data Factory Pipeline?

A Data Pipeline or Data Factory Pipeline, originating from Azure Data Factory, is essentially a set of activities organized into a workflow. For instance, you could design a process that entails running a Dataflow repeatedly until a specific condition is satisfied. Based on the outcome of each iteration, you can automate tasks such as sending an email, copying data, or executing a stored procedure.

If you're acquainted with SQL Server Integration Services (SSIS), you can think of the Data Pipeline as the Control Flow and the Dataflow as the Data Flow.

While Data Pipelines have similarities with Power Automate, they provide capabilities that are not easily attainable in Power Automate. Specifically designed for Data Factory, Data Pipelines enable large-scale data movement and encompass activities like Dataflow Gen2, data deletion, and the execution of Fabric Notebook or Spark job definitions.

Data Factory with Transformation Engine of Dataflow

The integration of Data Pipelines with Dataflow's transformation engine is a significant advantage. Previously, Data Factory was mainly used for data ingestion and, although it could handle transformations using SSIS, it lacked the simplicity of Power Query transformations. However, in Microsoft Fabric, it is now possible to create a Data Factory Pipeline that incorporates a Dataflow Gen2 as one of its activities, in addition to other tasks.

Data Pipeline vs. Dataflow

I'm sure you're curious about the distinction between a Data Pipeline and a Dataflow. They serve different purposes, and it's important to understand their roles.

When it comes to data transformation, Dataflows take the lead. They use Power Query to shape and format data from the source to the destination. On the other hand, Data Pipelines are responsible for managing the execution flow. They use control flow activities like loops and conditions to orchestrate a larger ETL process.

Summary

Yet another extremely significant component of Microsoft Fabric Data Factory is the Data Pipelines. These play a vital role in managing the control flow of execution, complementing the functions of Dataflows which primarily focus on data transformations. Data Pipelines main function involves orchestration of these transformations within a larger ETL (Extract, Transform, Load) process. Offering capabilities for looping, conditional execution, and integration of various data-related tasks, Data Pipelines are an indispensable part of executing complex data workflows. Keep an eye out for more in-depth articles on the functionalities and features of Data Pipelines

ReplyDeleteNice Blog Article.Thanks for sharing the information.

Very Informative Post .Thanks for Sharing

Microsoft Fabric Training

Microsoft Azure Fabric Training

Microsoft Fabric Online Training

Microsoft Fabric Course in Hyderabad

Microsoft Fabric Training In Ameerpet

Microsoft Fabric Online Training Course

Microsoft Fabric Training In Hyderabad

Microsoft Fabric Online Training Institute